There's such a buzz about immersion and VR at the moment that it's in danger of undermining itself as a game-changer. But it is one. And working in this area, where solutions don't quite work, or at least need to be hacked together, is really exciting. For practitioners who can cope with the tech involved (developers, or those migrating from gaming, know some of this stuff inside out, but still have to learn parts of it), there are process challenges that require problem-solving on every project. Some problems compromise the creative vision; some enable it. That's why I love it.

cinematic VR

I work in cinematic VR, partly because it was more within my comfort zone when I started out, and partly because I love the material challenges it brings. The camera often doesn't move, and everything can be seen (there is no frame), so the crew either hide or take on a deliberate role in the piece. Sound is a very (very) big deal in VR.

immersive performance

Before VR I worked with immersive installations, including surround sound (5.1) and projection mapping. I've also produced a good bit of video installation, but I wouldn't call that immersive. Technologists and performers sometimes seem to come from different worlds; I sit somewhere in the middle, which I always thought was a disadvantage but can now see is the way of the interdisciplinarian.

interactivity

Yes, it's another buzzword, but it can be great. You can make sound interactive in otherwise passive cinematic VR content, and you can use sensors placed in objects or on people to create interactive material experiences. For sound, you can also achieve this with headphones and very few visible cues as to why the sound is changing (which is pretty unnerving, and effective). That's where movement and embodiment become crucial.

cinematic VR: process

Rough-stitching on set for cinematic VR is time- and CPU-intensive.
But until live streaming for monitoring is a viable alternative (it's getting there), this is the way:

The final configuration of moving walls, green screens, two sets within one, an overhead camera rig, and the creative decision to get age-appropriate human hands in shot for the user... all in all, it was masochism:

The original (blue sky) shot plan, the first iteration of what became a different beast: [Shot plan](http://angelamcarthur.com/wp-content/uploads/2017/03/Shot-plan.pdf)

immersive performance: process

With live work, kit needs to be in good working order (reliable), and you need backup plans and as much contingency as possible. That isn't always easy: you may be plugging into a house system over which you have no control and to which you have limited prior access.

That said, there's nothing like the buzz of live work. And working with installations or performances which are inherently social (we're only talking about this because of VR!) is satisfying, as a practitioner and human being.

Funnily enough, my last multi-channel work (below) used found footage of pioneers like Ivan Sutherland for its visual counterpart, before I even started with VR:

Ivan! We love you:

interactivity: process

The trick with interactivity, in some senses, is to retain enough mystery to keep users engaged and enough feedback to keep them from feeling out of control, while thinking beyond the spectacle and the short-term gains of novelty. I've written about this in many places, but it's always a work in progress as the tech develops, and users' sophistication with it. Interactivity is cat and mouse: we don't even consider things 'interactive' once they become habitual. For 'Novelstalgia', the very novelty that leaves a bad taste in my mouth inspired its development. As much as it left that taste, I'm still eating it up...
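To make the feedback side of that balance a little more concrete, here is a minimal sketch of headphone-based interactivity in otherwise passive cinematic VR content: a mono source shifts between the ears as the listener turns their head, with no visible cue. It assumes a head-yaw reading in degrees from some tracker (hypothetical here, not a specific device) and uses a plain equal-power stereo pan law. Production VR audio would more likely use ambisonics or binaural rendering, so treat this as an illustration only.

```python
import math


def wrap_angle(deg: float) -> float:
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (deg + 180.0) % 360.0 - 180.0


def equal_power_pan(source_azimuth_deg: float, head_yaw_deg: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a mono source.

    Assumed convention (hypothetical): angles in degrees, positive to the
    listener's right. Sources behind the listener are folded onto the sides,
    because simple stereo panning cannot represent front/back.
    """
    relative = wrap_angle(source_azimuth_deg - head_yaw_deg)
    # Fold the rear half onto the front: 120 degrees (behind-right) becomes 60.
    if relative > 90.0:
        relative = 180.0 - relative
    elif relative < -90.0:
        relative = -180.0 - relative
    # Map [-90, 90] degrees to a pan position in [0, 1], then to a quarter circle.
    pan = (relative + 90.0) / 180.0
    theta = pan * math.pi / 2.0
    # cos^2 + sin^2 == 1, so total power stays constant as the source moves.
    return math.cos(theta), math.sin(theta)


if __name__ == "__main__":
    # A source fixed 90 degrees to the right, heard while the head turns towards it.
    for yaw in (0.0, 45.0, 90.0):
        left, right = equal_power_pan(90.0, yaw)
        print(f"yaw={yaw:5.1f}  left={left:.3f}  right={right:.3f}")
```

Because the squared gains always sum to 1, loudness stays roughly constant while the direction changes, so the user gets a sense of cause and effect without the response announcing itself.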

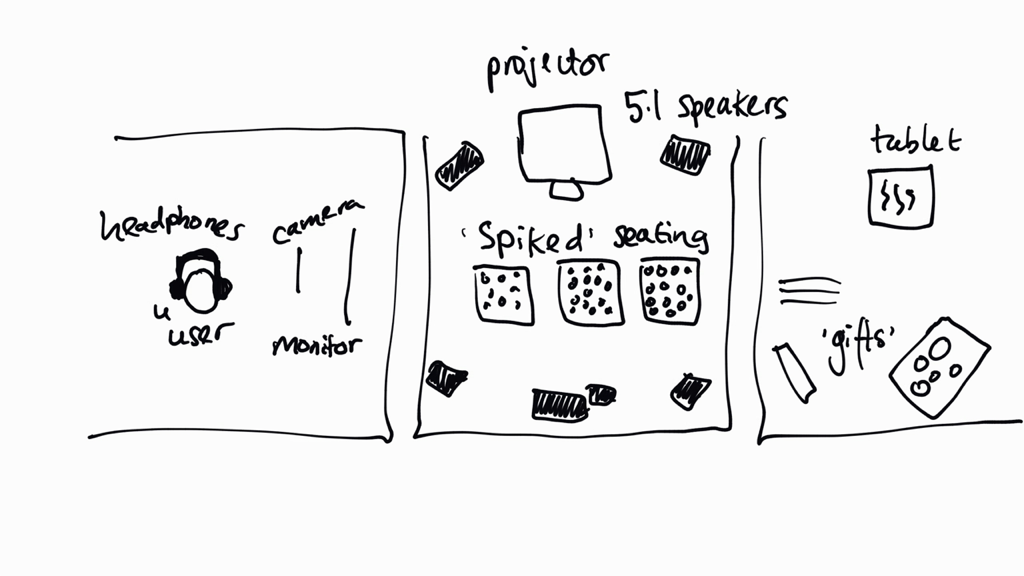

It was an ambitious installation, involving three zones, each using different technologies:

As ever, the original plans were scaled back (though not by much), excluding, among other things, infrared emitters that were meant to improve the accuracy of the eye-tracking camera:

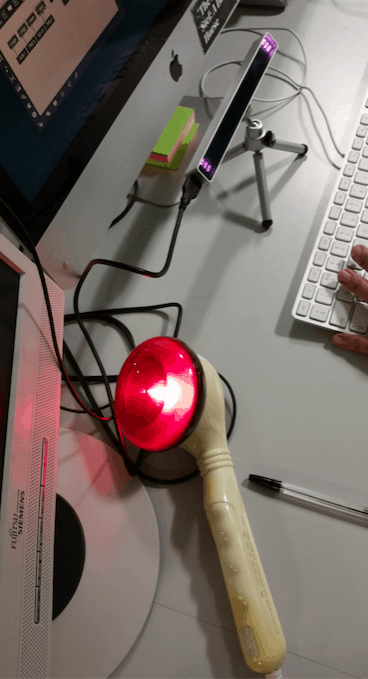

Here you can see the eye-tracking camera set up in a 'regular' position under 'regular' lighting conditions, using Max/MSP, Python and the terminal (as well as the camera's native SDK). Of course, it wasn't going to be that easy in situ. The installation space was intentionally dark (bad news for the camera), and the camera had to be mounted to accommodate an LCD screen on a fixed stand and users of different heights, who didn't (couldn't!) want to calibrate it themselves. As great as new tech is, our expectations of how it should perform usually exceed what it can actually do. Poor tech.

Grabbing people for feedback, even at a very (very) early stage, was encouraging and really valuable (had I not done this, I would have produced wave-generated sonification).
